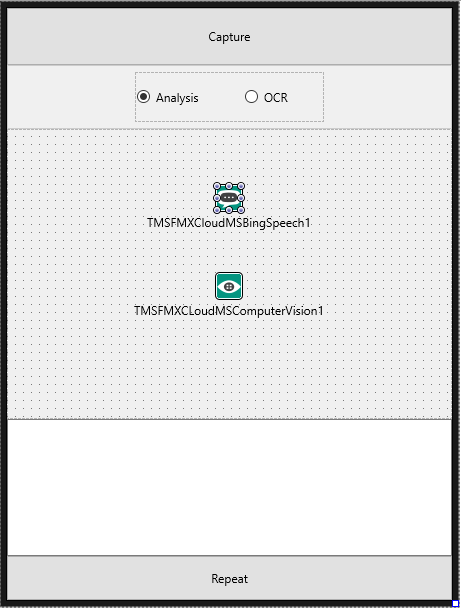

Earlier this week we released a major update of the TMS FMX Cloud Pack. This new version adds many new components that provide seamless access to a wide range of cloud services. Among the newly covered services, two from Microsoft stand out and open up new ways to enrich our Delphi applications. In this blog, I want to present the Microsoft Computer Vision and Microsoft Bing Speech services. Our new components TTMSFMXCloudMSComputerVision and TTMSFMXCloudMSBingSpeech offer instant and dead-easy access to these services. With these components at hand, the idea came up to create a small iPhone app that lets visually impaired people take a picture of their environment or of a document, have the Microsoft Computer Vision service analyze the picture, and let Microsoft Bing Speech read out the result.

So, roll up your sleeves and in 15 minutes you can assemble this cool iPhone app powered by Delphi 10.1 Berlin and the TMS FMX Cloud Pack!

To get started, we add the code that takes a picture with the iPhone camera. This snippet comes straight from the Delphi docs. The camera is started from a button's OnClick event:

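```delphi
// Requires FMX.Platform and FMX.MediaLibrary in the uses clause
procedure TForm1.Button1Click(Sender: TObject);
var
  Service: IFMXCameraService;
  Params: TParamsPhotoQuery;
begin
  if TPlatformServices.Current.SupportsPlatformService(IFMXCameraService,
    Service) then
  begin
    Params.Editable := True;
    // Specifies whether to save a picture to device Photo Library
    Params.NeedSaveToAlbum := False;
    Params.RequiredResolution := TSize.Create(640, 640);
    Params.OnDidFinishTaking := DoDidFinish;
    Service.TakePhoto(Button1, Params);
  end;
end;
```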

When the picture is taken with the camera, the DoDidFinish() method is called, so that is where the code to handle the image belongs. We'll cover that in a moment. Let's first set up the TMS FMX Cloud Pack components TTMSFMXCloudMSComputerVision and TTMSFMXCloudMSBingSpeech to consume the needed cloud services. You can download the components from the TMS FMX Cloud Pack page. After installing, over 60 new cloud service components are added to your tool palette.

You need an API key to use these Microsoft services. Go to https://www.microsoft.com/cognitive-services/ and sign up for free for the Computer Vision API and the Bing Speech API; you'll receive an API key for each. In the app, two constants hold these keys, and each component's App.Key property is initialized when the app starts:

```delphi
procedure TForm1.FormShow(Sender: TObject);
begin
  TMSFMXCloudMSBingSpeech1.App.Key := MSBingSpeechAppkey;
  TMSFMXCloudMSComputerVision1.App.Key := MSComputerVisionAppkey;
end;
```

Now that these components are ready to be used, let's complete the DoDidFinish() method that is triggered when the picture is taken. This method receives the image taken. The image is saved locally and then submitted to the Microsoft Computer Vision API for analysis. In the app, a pair of radio buttons lets the user choose between regular image analysis and OCR.
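The two app key constants assigned in FormShow are not shown in this post. As a minimal sketch, they could be declared in a small unit of their own; the unit name `AppKeys` and the placeholder values are assumptions for illustration, only the constant names come from the code above.

```delphi
unit AppKeys; // hypothetical unit name, not part of the TMS FMX Cloud Pack

interface

const
  // Placeholder values (assumption): replace them with the API keys
  // you receive after signing up for each Microsoft service.
  MSComputerVisionAppkey = 'your-computer-vision-key';
  MSBingSpeechAppkey = 'your-bing-speech-key';

implementation

end.
```

Adding this unit to the form's uses clause makes both constants visible in FormShow. Keeping the keys in one place also makes it easier to leave them out of source control.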

A TTask starts the image analysis with the call TMSFMXCloudMSComputerVision1.ProcessFile(s, cv). The TTask keeps the UI from locking up during the analysis: after all, the image must be submitted to Microsoft, processed, and the result returned and parsed, which can take 1 or 2 seconds. Depending on the analysis type, the result is captured as text in a memo control. After this, we connect to the Bing Speech service.

```delphi
procedure TForm1.DoDidFinish(Image: TBitmap);
var
  aTask: ITask;
  s: string;
  cv: TMSComputerVisionType;
begin
  CaptureImage.Bitmap.Assign(Image);

  // take local copy of the file for processing
  s := TPath.GetDocumentsPath + PathDelim + 'photo.jpg';
  Image.SaveToFile(s);

  // read the radio buttons in the UI thread, before the task starts
  if btnAn1.IsChecked then
    cv := ctOCR
  else
    cv := ctAnalysis;

  // asynchronously start image analysis
  aTask := TTask.Create(
    procedure
    var
      i: Integer;
    begin
      if TMSFMXCloudMSComputerVision1.ProcessFile(s, cv) then
      begin
        // Description is not declared in this method; presumably a string field of the form
        Description := '';
        if cv = ctAnalysis then
        begin
          // concatenate the image description returned from Microsoft Computer Vision API
          for i := 0 to TMSFMXCloudMSComputerVision1.Analysis.Descriptions.Count - 1 do
            Description := Description + TMSFMXCloudMSComputerVision1.Analysis.Descriptions[i] + #13#10;
        end
        else
          Description := TMSFMXCloudMSComputerVision1.OCR.Text.Text;

        // update UI in main UI thread; Synchronize waits for the update,
        // so the memo holds the text before the speech service connects
        TThread.Synchronize(nil,
          procedure
          begin
            if Assigned(AnalysisResult) then
              AnalysisResult.Lines.Text := Description;
          end);

        TMSFMXCloudMSBingSpeech1.Connect;
      end
      else
      begin
        // update UI in main UI thread
        TThread.Queue(nil,
          procedure
          begin
            AnalysisResult.Lines.Add('Sorry, could not process image.');
          end);
      end;
    end);

  aTask.Start;
end;
```
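To see the threading pattern of DoDidFinish in isolation, here is a minimal console sketch that uses only the Delphi RTL. It does the slow work in a task and hands the result back to the main thread. The `Sleep` call and the result string are stand-ins (assumptions) for the real ProcessFile call, and the program name is made up for illustration.

```delphi
program TaskQueueSketch; // hypothetical name, for illustration only

{$APPTYPE CONSOLE}

uses
  System.SysUtils, System.Classes, System.Threading;

var
  Done: Boolean = False;

begin
  // Run the slow work on a thread pool thread.
  TTask.Run(
    procedure
    var
      Description: string;
    begin
      Sleep(1000); // stand-in for submitting the image and waiting for the reply
      Description := 'placeholder analysis result';

      // Hand the result to the main thread. Passing nil means the queued
      // call is not tied to the lifetime of the worker thread.
      TThread.Queue(nil,
        procedure
        begin
          Writeln('Result: ', Description);
          Done := True;
        end);
    end);

  // A console program has no message loop, so the main thread must pump
  // queued calls itself. In an FMX app the framework does this for you.
  while not Done do
    CheckSynchronize(50);
end.
```

The design choice is the same as in the app: anything that waits on the network stays off the main thread, and anything that touches shared UI state goes through the main thread.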

In the TMSFMXCloudMSBingSpeech.OnConnected event, we can then send the text for speech synthesis to the Microsoft service. As a result, we retrieve an audio stream that is then played through the device.

```delphi
procedure TForm1.TMSFMXCloudMSBingSpeech1Connected(Sender: TObject);
var
  st: TMemoryStream;
  s: string;
begin
  s := AnalysisResult.Lines.Text;
  st := TMemoryStream.Create;
  try
    // request the audio for the text, then play it
    TMSFMXCloudMSBingSpeech1.Synthesize(s, st);
    TMSFMXCloudMSBingSpeech1.PlaySound(st);
  finally
    st.Free;
  end;
end;
```

So, it doesn't take much more than this to enhance the life of visually impaired people a little and let the iPhone read out what is around them or help with reading documents.

Now, let's try out the app in the real world. Here are a few examples we tested.

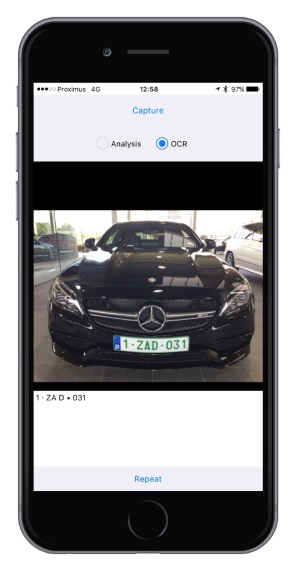

Using the app on the road to read road signs and capture car license plates

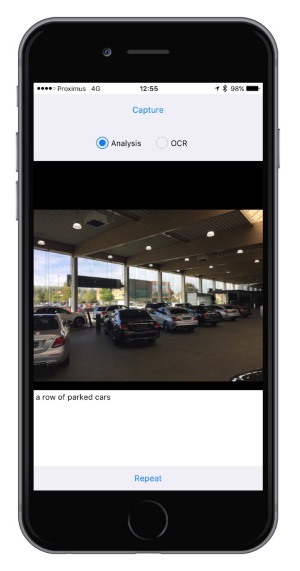

Trying to figure out what we see in a showroom

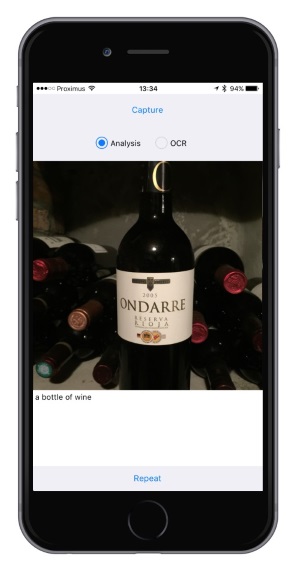

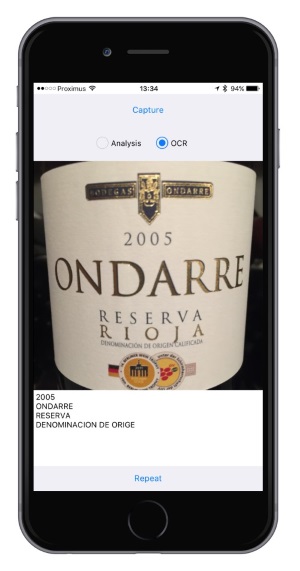

The app first correctly determined that this is a bottle of wine in the cellar and was then pretty good at reading the wine bottle label.

You can download the full source code of the app here and have fun discovering these new capabilities.

Enjoy!